Strap in, because Deep Fake Neighbour Wars will lead us to an information apocalypse

Famous faces are manipulated into dreadfully unfunny skits in this AI-driven new comedy series. Luke Buckmaster says the consequences of deep-faked disinformation are no laughing matter, either.

By now most people probably have a broad understanding of the term “deepfake” and have observed drizzles of the technology in action. Probably the meaningful, high impact stuff—like Tom Cruise dancing in a dressing gown or Keanu Reeves foiling a robbery. Many new technologies begin with innocuous gimmicks as they find their shape and form. This was the case with motion pictures, the earliest short films typically capturing gimmicky spectacle (dancing, sports, magic tricks etcetera) rather than telling stories per se. All that arty Citizen Kane stuff, and those MCU movies that go on forever, came much later.

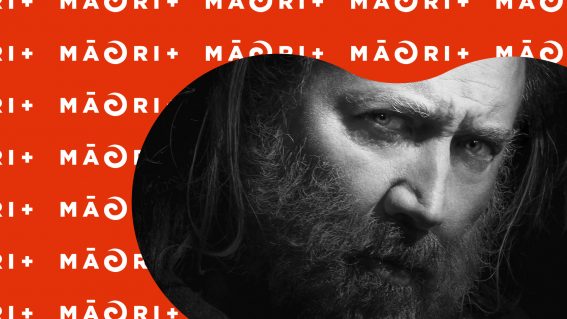

It’s sad, and kind of pathetic, that one of the first narrative TV productions to integrate deepfakes as a core feature is so spectacularly not worth your time. Deep Fake Neighbour Wars is a lame comedy show loosely presented in the format of a reality TV program, based in a world where celebrities have normal jobs and chuck wobblies at their neighbours just like actual people. Famous folk going to war include Idris Elba versus Kim Kardashian; Usain Bolt and new wife Phoebe Waller-Bridge-Bolt versus Rihanna; and Greta Thunberg versus Conor McGregor and Ariana Grande.

All have been deepfaked. Actors play them and provide voices, their faces swapped out during post-production. The show kicks off in south London, where Elba lives in a ground floor flat and is furious about having to share a community garden he fastidiously maintains with Kardashian, who has just moved into the space above him. In this alternate reality Kardashian is a bus driver with a long-held dream of snagging a particular scenic route. This is supposed to be funny because, um, Kardashian would never drive a bus?

Elsewhere, Thunberg, now a single mother, has moved from Sweden to Southend-on-Sea to escape the cold weather, only to encounter Christmas in July decorations erected by new neighbours McGregor and Grande. She goes to war, incensed, believing they called her a ho in a letter they signed off with “ho ho ho” (are you laughing yet?). Watching Thunberg overreact is funny because, um, the real Thunberg has a history of overreacting to small things that really don’t matter, such as Earth’s sixth mass extinction and the collapse of the planet’s life support systems.

I could go on about why this show is as funny as a poke in the eye with a sharp stick. All eyes, however, will be on the deepfakes. Pedants will pause, zoom in and say “this isn’t right” but everybody should agree that the technology is very impressive. The show, while crummy and cringey, is significant in two respects. Firstly as a historical document capturing the technology’s largely innocuous application in the present moment. Secondly as a forward-looking hypothesis, more of an open question really, about where it’s all heading. This aspect is not seriously explored or even contemplated by the show, but it’s a question on the tip of everyone’s tongue, so I’ll dive in.

The future of deepfakes is a tangled and speculative discussion, perhaps more befitting of a PhD thesis than an article on a movie and TV website. So let’s focus on just two possible directions, one more innocuous than the other.

The first is that the technology will be widely deployed by filmmakers to bring dead stars back to life and accelerate a trajectory already well underway: motion pictures becoming a form of animation rather than (as they were originally) a medium reliant on a camera that objectively records whatever’s in front of it. As I wrote last year, in an article arguing that Tom Cruise might represent the last generation of flesh and blood movie stars:

Despite the inevitable outcry, and pleas to return to the good old days of “real” performers, this may actually be a good thing for movies—reinvigorating the art form and accelerating a trajectory observed by Lev Manovich in his influential book The Language of New Media. Manovich argued that digital artistry changed the essence of the movie medium, which can “no longer be distinguished from animation” and became, in effect, “a sub-genre of painting.”

Want to see a new Top Gun adventure, starring Tom Cruise in his heyday, with Bette Davis playing his love interest and Humphrey Bogart his mentor? The possibilities are endless. Those who balk at the idea of virtual performers ignore that the very essence of motion pictures is illusionary.

Ever since Louis Lumière premiered his film of a train arriving at a station, cinema has been entirely about fooling the senses, from the process of projecting still images in rapid succession (providing the impression of movement) to countless codes and conventions developed to maintain the ruse. The question of emotional truth is always more important than trivial matters about whether what we see in an artistic work was ever actually, physically there.

That’s the utopian reading, even if the above might not be your jam. Now for the icky stuff. Much of the worry around deepfakes concerns their capacity to be used deceptively and maliciously. For instance, what if a deepfake video is engineered, convincingly displaying a world leader declaring war or launching a nuclear weapon? Or, on a smaller scale, a video is confected that shows a celebrity saying or doing something terribly offensive? Governments and media organisations will scramble to configure new ways of determining the veracity of video footage. Things will get weird.

But the greatest danger, in the words of scholar Mika Westerlund, is “not that people will be deceived, but that they will come to regard everything as deception.” This phenomenon, assigned the not-at-all concerning name “information apocalypse,” involves a tonne of potential consequences far eerier than any episode of Black Mirror. The knowledge that anything we see and hear might be disinformation is another way of saying that everything we see and hear might be disinformation: potentially leading to the view that nothing is to be trusted.

Knowing how crap humans are at this kind of stuff, a likely outcome will be that, instead of diligently sorting fact from fiction, people will pick what they consider “real” according to what they want to believe—content that affirms their pre-existing perspectives and biases. We’ve seen some of this recently in the shocking number of Americans who believe Donald Trump’s lie that the 2020 US presidential election was stolen, and climate deniers who’d prefer to listen to Joe Rogan over scientists, because geez those woke lefties just keep harping on about the environment. In the future, they’ll have plenty of deepfaked videos to “prove” their point.

All of which, cor blimey, takes us a long way away from squabbling neighbours going toe-to-toe over community garden space in south London. If this terrible future comes to pass, maybe we’ll grow nostalgic for those old deepfakes showing celebrities dancing in dressing gowns and botching robberies. Nobody, however, will ever get nostalgic for Deep Fake Neighbour Wars: it’s too dumb, too awful, too monstrously unfunny.